This section assumes the reader is already comfortable working with ROS and Gazebo. ROS (Robot Operating System) provides libraries and tools to help software developers create robot applications. It provides hardware abstraction, device drivers, libraries, visualizers, message-passing, package management, and more. Along with ROS, Gazebo is used for simulation. A well-designed simulator makes it possible to rapidly test algorithms, design robots, and perform regression testing using realistic scenarios. Gazebo offers the ability to accurately and efficiently simulate populations of robots in complex indoor and outdoor environments.

For a more detailed explanation of installing ROS, package dependencies, and setting up an Xbox controller for control inputs, see the ROS Integration – Development Environment Setup page. For information on Gazebo model files, launch files, sensor visualization, and world files, see the ROS Integration – Model Dynamics & Sensors page.

Moving beyond the simulation and into the real world, the DIY Autonomous Vehicle Project section describes the implementation of the FormulaPi lane tracking algorithms on an Elegoo vehicle with a RaspberryPi 3, Arduino Uno, and RaspberryPi camera.

Yetiborg Model Simulation

To simulate the Yetiborg vehicle from PiBorg, we used the Clearpath Robotics Husky vehicle model. Clearpath Robotics is a hardware and software manufacturer that provides unmanned vehicles, systems and solutions to industry leaders in over 35 countries around the world. They heavily support the open-source community and integrate ROS into the design of their robotic platforms.

The Husky platform most closely resembles the PiBorg Yetiborg robot. The Husky was developed to be an outdoor ready unmanned ground vehicle used for research and rapid prototyping. Husky fully supports ROS—all of the packages are available in the Husky github org. This section will describe the process of installing and operating the Husky robot in Gazebo. Then, the parameters of Husky model will be modified to more closely resemble the YetiBorg dimensions. Finally, the YetiBorg model will be incorporated into the FormulaPi track world, resulting in a complete simulation environment.

Install Required Dependencies

The first step is to download the base Husky packages from the Husky Github repository. This includes the following ROS packages used for simulation as well as operation of the real Husky robot.

- husky_control: Control configuration

- husky_description: Robot description (URDF)

- husky_msgs: Message definitions

- husky_navigation: Navigation configurations and demos

- husky_ur5_moveit_config: MoveIt configuration and demos

Next, download the Husky Gazebo package from the Husky Simulator repository on Github.

- husky_gazebo: Gazebo plugin definitions and extensions to the robot URDF.

Lastly, download the Husky Rviz package from the Husky Desktop repository on Github.

- husky_viz: Visualization configuration for Clearpath Husky

Since these packages are installed from source, they need to be installed in the catkin folder. They can be built with the following command:

cd ~/catkin_ws/src git clone https://github.com/husky/husky.git git clone https://github.com/husky/husky_simulator.git git clone https://github.com/husky/husky_desktop.git cd ~/catkin_ws/ catkin_make

The Clearpath Robotics page on the ROS wiki describes additional drivers and packages that are required for both real world and simulated operation of their various robotic platforms. The following packages are the ones that are required for use with the Husky platform.

- ros_control: A set of packages that include controller interfaces, controller managers, transmissions and hardware_interfaces.

- joint_state_controller: Controller to publish joint states

- diff_drive_controller: Controller for a differential drive mobile base.

- hector_gazebo: Packages related to simulation of robots and environments using gazebo. Currently, this includes gazebo plugins and world files.

- universal_robot: Contains packages that provide nodes for communications with the UR5 robot arm including conrollers, URDF models, and MoveIt packages.

- robot_localization: Provides nonlinear state estimation through sensor fusion of an abritrary number of sensors.

- dwa_local_planner: Provides an implementation of the Dynamic Window Approach to local robot navigation on a plane.

- lms1xx: The lms1xx package contains a basic ROS driver for the SICK LMS1xx line of LIDARs.

- yocs_cmd_vel_mux: A multiplexer for command velocity inputs. Arbitrates incoming cmd_vel messages from several topics, allowing one topic at a time to command the robot, based on priorities.

- interactive_marker_twist_server: Provides a basic generic marker server for teleoperation of twist-based robots, particularly simple differential drive bases.

- teleop_twist_joy: Provides a generic facility for tele-operating Twist-based ROS robots with a standard joystick.

- rviz_imu_plugin: Rviz plugin that is used to display sensor_msgs/Imu messages in Rviz.

These can all be installed as debian packages with the following commands

sudo apt-get install ros-indigo-universal-robot sudo apt-get install ros-indigo-lms1xx sudo apt-get install ros-indigo-yocs-cmd-vel-mux sudo apt-get install ros-indigo-gazebo-ros-control sudo apt-get install ros-indigo-hector-gazebo sudo apt-get install ros-indigo-teleop-twist-joy sudo apt-get install ros-indigo-robot-localization sudo apt-get install ros-indigo-interactive-marker-twist-server sudo apt-get install ros-indigo-joint-state-controller sudo apt-get install ros-indigo-diff-drive-controller sudo apt-get install ros-indigo-dwa-local-planner sudo apt-get install ros-indigo-rviz-imu-plugin

Husky Model Simulation

To ensure the Husky model and the basic dependencies are installed correctly, launch the Husky model in an empty Gazebo world.

roslaunch husky_gazebo husky_empty_world.launch

The launch file will open Gazebo and appear similar to the image below

Next, test the joystick control by running the following commands in separate windows.

roslaunch husky_control teleop.launch roslaunch husky_gazebo husky_empty_world.launch

The robot can be maneuvered using the joystick to change its direction. The user can alternate between two speeds by holding either the “A” or “B” buttons on an Xbox controller.

Finally, test the path planning with a basic move_base setup but no mapping or localization. Run these commands in separate windows.

roslaunch husky_gazebo husky_playpen.launch roslaunch husky_viz view_robot.launch roslaunch husky_navigation move_base_mapless_demo.launch

In Rviz, ensure the Fixed Frame parameter is set to “odom” and the visualizers in the Navigation group are enabled. You can use the 2D Nav Goal tool in the top toolbar to select a movement goal in the visualizer. The Husky robot should automatically navigate to the goal position and orientation. An example of the Rviz and Gazebo visualizations is shown below. The green arrow depicts the goal, the yellow lines represent the LIDAR output, and the blue line behind the Husky represents the previously navigated path.

Husky Model Modification for Yetiborg

After the Husky model is successfully running and integrated with ROS and Gazebo, we need to modify the model parameters to more closely resemble the Yetiborg robot. A list of some of the default environmental variables can be found here. Modifying these parameters will improve the accuracy of the simulation and help ensure that the FormulaPi software performs as well in the real-world races as it does in the simulations. The formulapi_ROS_simulator package contains modified versions of the Husky simulation files and serves as the foundation for the development of the FormulaPi simulation environment.

The image below was used to approximate the dimensions of the Yetiborg robot.

The Yetiborg model is spawned with the spawn_yetiborg.launch file which loads yetiborg_description.gazebo.xacro. This file loads the yetiborg_robot and yetiborg_robot_gazebo macros.

The yetiborg.urdf.xacro file defines the yetiborg_robot macro and is loaded from the yetiborg_description package. Note that this file includes the updated dimensions of the Yetiborg in meters. The x and y dimensions are derived from the base of the Yetiborg. The z dimension is based on the size of the wheel. The wheelbase is the distance between the center of the front and rear wheels. The track variable is the distance between the centers of both rear wheels. The wheel_length value is the width of a wheel while the wheel_radius is the radius.

<!-- Base Size --> <property name="base_x_size" value="0.1250000" /> <property name="base_y_size" value="0.0830000" /> <property name="base_z_size" value="0.0880000" /> <!-- Wheel Mounting Positions --> <xacro:property name="wheelbase" value="0.10500" /> <xacro:property name="track" value="0.1315" /> <xacro:property name="wheel_vertical_offset" value="0.0" /> <!-- Wheel Properties --> <xacro:property name="wheel_length" value="0.042" /> <xacro:property name="wheel_radius" value="0.044" />

Additionally, the base_link.dae mesh file’s scale is modified to reflect the Yetiborg dimensions.

The husky_wheel macro is used to create and modify the four wheels. This macro is defined in the wheel.urdf.xacro file in the yetiborg_description package. Here, the wheel.dae mesh file is scaled for the Yetiborg.

The husky_decorate macro is also referenced which contains information for visualizing other parts of the Husky base such as the top chassis, bumper, and user rail. These are defined in the decorations.urdf.xacro file in the yetiborg_description package. For the Yetiborg, the top_chassis.dae and top_plate.dae mesh files are scaled while the user rail and bumpers are not enabled.

Lastly, the yetiborg.urdf.xacro file defines the parameters for a simple camera. The Husky robot comes pre-configured with simulation models for a UR5 arm, Kinect camera, and a SICK LMS1xx LIDARs but doesn’t include a simple camera. The camera_macro macro is used to set the parameters and position of a simple camera on the robot. This is meant to simulate the Raspberry Pi camera mounter on the Yetiborg robot. This macro is defined in the camera.urdf.xacro also in the yetiborg_description package.

In addition to the yetiborg_robot macro, the yetiborg_description.gazebo.xacro file loads the yetiborg_robot_gazebo macro. The yetiborg_robot_gazebo macro is defined in the yetiborg.gazebo.xacro file in the yetiborg_gazebo package. This file includes the plugins for sensors such as the IMU, GPS, Kinect, and the UR5 robot arm.

To test everything, we can visualize the Yetiborg model in an empty Gazebo world. To do this, run the following command.

roslaunch formulapi_gazebo yetiborg_empty_world.launch

This will result in a Gazebo output similar to the image below.

Track Design

The PiBorg team designed and built the FormulaPi track at their offices. The track is divided up into 6 lanes alternating between red, blue, and green. The colors assist the computer vision algorithms in determining the location of the vehicle on the track. The track consists of 5 turns with a lap length of around 23 meters. The complete dimensions of the track are shown in the image below.

Sketchup Track Design

To recreate the track in the Gazebo simulation environment, the track was drawn in Sketchup. They provide several video tutorials to get started with the software. Using the dimensions provided, the track was drawn in Sketchup. The walls were made to be 0.2 meters tall as described on the FormulaPi website. For the lanes, the textures were taken from this image. The resulting model looks like the image below and can be downloaded from the Sketchup Warehouse.

Simulation in Gazebo

In order to import the track model into Gazebo, it needs to be saved as a .dae file. A copy of formulapi_track_1.dae is available. Next, a world file is developed. The ground plane was removed since it interfered with the track visualization as shown in the resulting fformulapi_track1.world file.

To test the model, the track can be visualized in Gazebo by running the following commands.

roscd formulapi_gazebo cd worlds gazebo formulapi_track1.world

The Gazebo simulation of the FormulaPi track model appears below.

This world can be incorporated into a launch file by including the following code

<include file="$(find gazebo_ros)/launch/empty_world.launch">

<arg name="world_name" value="$(find formulapi_gazebo)/worlds/formulapi_track1.world"/>

</include>

The file formulapi_track1.launch loads the track and spawns the Yetiborg model. It can be launched with the following command

roslaunch formulapi_gazebo formulapi_track1.launch

The resulting Gazebo simulation depicts the FormulaPi track with the Yetiborg model simulated on it.

This image, taken from the PiBorg offices, shows the track in real life for comparison purposes.

FormulaPi Software Integration

The PiBorg team provides all competitors with a common code base. This software contains the minimum amount of functions needed to process images and control the Yetiborg. The software is only available to competitors and is hosted privately on SourceForge. The intent is to keep the software simple enough for entry-level competitors but to allow flexibility for more advanced competitors to modify the software to improve performance.

Creating a FormulaPi Software ROS Package

For the purposes of developing this simulation environment, the FormulaPi code was placed into a separate ROS package outside of the formulapi_ROS_simulator package. This allows those with access to the FormulaPi software to integrate that code base into this simulator while enabling the development of supplemental image processing or vehicle control software to be developed. This software can then be integrated back into the FormulaPi software if desired.

While the FormulaPi package is intended to remain private to those participating in the competition, the formulapi_sitl package was developed in the formulapi_ROS_simulator that relies on FormulaPi functions to demonstrate basic line following and autonomous control with the FormulaPi functions. This requires the FormulaPi software to be compiled in a ROS package.

The FormulaPi software relies on OpenCV for image processing. OpenCV2 was likely installed with the generic ROS Indigo distribution. Additionally, the cv_bridge package converts between ROS Image messages and OpenCV images. This package, along with the image_geometry package, is installed with the following command:

sudo apt-get install ros-indigo-vision-opencv

Launching a FormulaPi SITL Simulation

The file sitl_file.py creates a ROS node which receives the simulated images from the camera, calls the FormulaPi ImageProcessor.py functions to estimate the Yetiborg’s position, and computes a velocity command to send to the Yetiborg to keep it at the desired track position.

In order to ensure that the sitl_file.py is able to use the ImageProcessor.py functions, the formulapi_sitl package was linked to the formulapi_source package which contains the FomrulaPi provided files. The CMakeLists.txt and package.xml files are updated to reference the formulapi_source package.

The sitl_file.py begins by importing several modules, several of which are of note. The cv_bridge module converts between ROS and OpenCV image types. The geometry_msgs module allows us to use the Twist message which is what is required to send commands to the Yetiborg differential drive controller. Finally, the formulapi_source module contains the provided FormulaPi functions

from cv_bridge import CvBridge, CvBridgeError from geometry_msgs.msg import Twist from formulapi_source import ImageProcessor

The file defines the formulapi_sim class. This class defines and initializes several global variables. Of note, it creates an ImageProcessor object to access the FormulaPi ImageProcessor.py functions. It also creates several ROS message subscriptions and publications. One subscriber listens on the “/cam/camera_/image_raw” topic to receive the images from the simulated camera. The post-processed images are published on the “/image_converter/output_video” topic and the desired velocity control commands are sent on the “/platform_control/cmd_vel” topic.

class formulapi_sim(object):

def __init__(self):

self.imgprocess = ImageProcessor.ImageProcessor()

"""ROS Subscriptions """

self.image_pub = rospy.Publisher("/image_converter/output_video",Image, queue_size=10)

self.image_sub = rospy.Subscriber("/cam/camera_/image_raw",Image,self.cvt_image)

self.cmdVelocityPub = rospy.Publisher('/platform_control/cmd_vel', Twist, queue_size=10)

Each time an image is received on the self.image_pub, the data is stored as an Image variable and passed to the cvt_image function. This function converts the image from the ROS Image message format to the OpenCV format and save the image as the self.latestimage global variable. Finally the self.process flag is set which signals that the self.latestimage file is no longer empty.

def cvt_image(self,data):

try:

self.latestimage = self.bridge.imgmsg_to_cv2(data, "bgr8")

except CvBridgeError as e:

print(e)

if self.process != True:

self.process = True

When the node is initialized, a simple loop is run to receive images and publish control commands. The loop currently starts immediately but could be modified to be triggered by some additional flags. In the FormulaPi code, the Yetiborgs begin moving once they have detected the starting lights turning green, for example.

If an image has already been received, the self.process flag is set to “True.” The latest image is processed using the ProcessingImage function. The Yetiborg’s estimated track position is computed from the image and stored in the trackoffset variable. This is passed to the AdjustMotorSpeed function defined in the formulapi_sim class. Lastly, the image is converted back into the ROS Image message format and published.

def run(self):

while True:

# Only run loop if we have an image

if self.process:

self.imgprocess.ProcessingImage(self.latestimage) # FormulaPi Image Processing Function

self.AdjustMotorSpeed(self.imgprocess.trackoffset) # Compute Motor Commands From Image Output

# Publish Processed Image

cvImage = self.imgprocess.outputImage

try:

imgmsg = self.bridge.cv2_to_imgmsg(cvImage, "bgr8")

self.image_pub.publish(imgmsg)

except CvBridgeError as e:

print(e)

The AdjustMotorSpeed function takes the estimated Yetiborg tack position and computes a desired velocity command. Currently the forward velocity, cmdvel.linear.x, is set at 0.2 m/s. This could be modified using a more advanced control system relying on additional input variables. To determine which direction to head to maintain the correct course, the cmdvel.angular.z variable is computed using a simple PI controller. The error is computed between the current estimated Yetiborg trackposition, pos, and the desired position, self.targetlane. The resulting desired velocity command is then published to the Yetiborg controller which determines individual motor speeds.

def AdjustMotorSpeed(self, pos): self.cmdvel.linear.x = 0.2 self.sim_time = rospy.Time.now() self.dt = (self.sim_time - self.last_time).to_sec(); self.position_er = self.targetlane - pos self.cp = self.position_er * self.Kp self.vel_er = (self.position_er - self.position_er_last) * self.dt self.cd = self.vel_er * self.Kd self.cmdvel.angular.z = self.cp - self.cd self.cmdvel.angular.z = self.limit(self.cmdvel.angular.z, -1, 1) self.cmdVelocityPub.publish(self.cmdvel) self.position_er_last = self.position_er self.last_time = self.sim_time

This complete simulation can be run with the linefollow_cam_formulapi_track1.launch launch file. This file loads the formulapi_track1.world Gazebo world file and spawns the Yetiborg model. It then runs the robot_state_publisher node and launches the control.launch file which runs the low-level Yetiborg controller as well as the teleop.launch file which enables user control input from a joystick. Finally, the SITL node is launched from the sitl_file.py file and an image_view node is initialized to view the post-processed image stream.

<!-- Run FormulaPi Software In The Loop Node -->

<node name="formulapi_sitl" pkg="formulapi_sitl" type="sitl_file.py" output="screen" />

<!-- spawn image viewer for camera -->

<node pkg="image_view" type="image_view" name="image_view" >

<remap from="image" to="/image_converter/output_video" />

</node>

The simulation is run using the following command.

roslaunch formulapi_gazebo linefollow_cam_formulapi_track1.launch

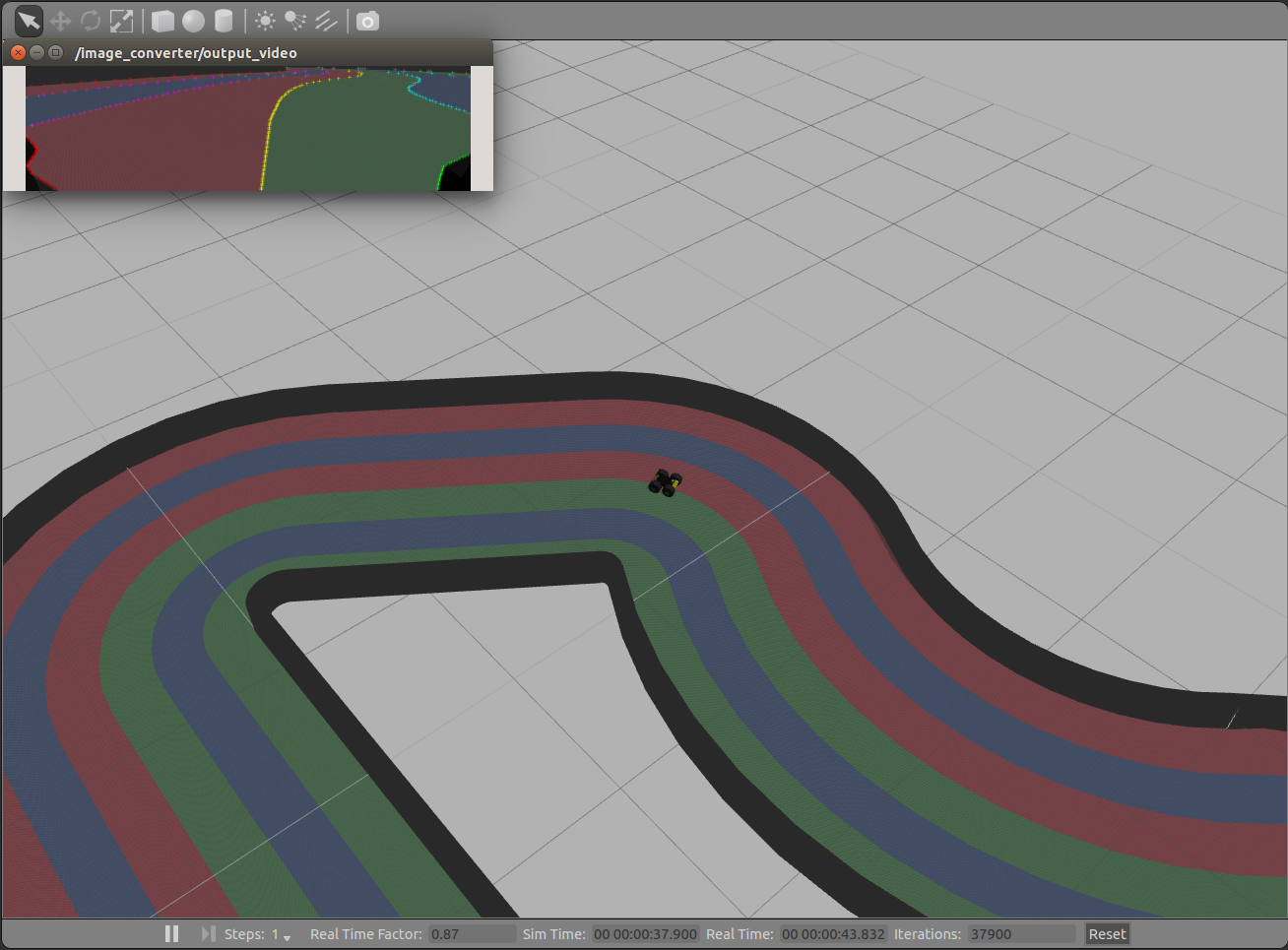

Once the simulation is run, the Yetiborg will move forward, maintaining the desired track position around the track while the computer vision output is displayed. An example screenshot is shown below.

Additionally, a video summarizing this development was created and is displayed below.

[embedyt] http://www.youtube.com/watch?v=rVsPItHA0xw%5B/embedyt%5D

Hello, there is a typo in : sudo apt-get install ros-indigo-universal_robot ; it is sudo apt-get install ros-indigo-universal-robot

Great simulation tutorial, currently going throught it for a school course. I’ll have to see how put a pilot in the car and manage public peoples on terraces around the track.

Thanks !

Same for sudo apt-get install ros-indigo-robot_localization -> sudo apt-get install ros-indigo-robot-localization

Thanks for this and good luck!

Hi,

I tried the following command to launch the husky_empty_world file

roslaunch husky_gazebo husky_empty_world.launch

but I get an error:

No such file or directory:

XacroException(‘No such file or directory: ‘,)

while processing /home/tsc-ns-vm/catkin_ws/src/husky_simulator/husky_gazebo/launch/spawn_husky.launch:

Invalid tag: Cannot load command parameter [robot_description]: command [/opt/ros/kinetic/share/xacro/xacro.py ” laser_enabled:=true ur5_enabled:=false kinect_enabled:=false ] returned with code [2].

Param xml is

The traceback for the exception was written to the log file

Hi, Can you tell me how to get the FormulaPi software package or run your simulator without it? The formulapi_ROS_simulator generates errors with a catkin_make because of its dependencies on the FormulaPi software thereby making the package unusable.

— Could not find the required component ‘formulapi_source’. The following CMake error indicates that you either need to install the package with the same name or change your environment so that it can be found.